The journey toward fully automated vehicles hinges on advanced sensing technologies, in particular the autonomous driving sensors that enable vehicles to perceive and react to their surroundings in real time. These sensors (LiDAR, radar, cameras and ultrasonic devices) form the perception backbone of self-driving systems by detecting objects, mapping terrain and supporting decision-making for control systems. As automakers ramp up development of advanced driver assistance systems and autonomous vehicles, the sensor suite becomes a key differentiator in safety, performance and reliability.

Modern autonomous driving sensors are evolving rapidly in both capability and integration. LiDAR (light detection and ranging) sensors produce high-resolution 3D point clouds that precisely map obstacles and road features, while cameras provide colour and texture information that helps with object classification and lane detection. Radar sensors excel at long-range detection and operate reliably in adverse weather, whereas ultrasonic sensors cover near-field detection at low speeds, such as during parking or close-proximity maneuvers. Sensor fusion, the combining of data from multiple sensing modalities, is increasingly vital for robustness, redundancy and the handling of worst-case scenarios under real-world conditions.
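To make the idea of sensor fusion concrete, the sketch below combines distance estimates for the same object from several sensors using an inverse-variance weighted mean, one simple and widely taught fusion rule. It is illustrative only and not a description of any production system: the `RangeReading` type, the `fuse_ranges` function and the noise figures are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RangeReading:
    """One sensor's distance estimate to the same object (hypothetical type)."""
    sensor: str
    distance_m: Optional[float]  # None when the sensor has no valid return
    std_dev_m: float             # assumed 1-sigma measurement noise, must be > 0


def fuse_ranges(readings):
    """Inverse-variance weighted mean of the valid readings.

    Returns (fused_distance_m, fused_std_dev_m), or None when no sensor
    produced a valid reading.
    """
    # Redundancy: sensors that are blocked or failed are simply skipped.
    valid = [r for r in readings if r.distance_m is not None]
    if not valid:
        return None
    # Less noisy sensors get proportionally more weight.
    weights = [1.0 / (r.std_dev_m ** 2) for r in valid]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, valid)) / total
    fused_std = (1.0 / total) ** 0.5
    return fused, fused_std


# Illustrative values only: the camera is assumed to be blinded by glare.
readings = [
    RangeReading("lidar", 50.0, 0.1),
    RangeReading("radar", 50.6, 0.3),
    RangeReading("camera", None, 2.0),
]
result = fuse_ranges(readings)
```

In this sketch the fused estimate sits closer to the reading with the smaller assumed noise, and its uncertainty is lower than that of any single contributing sensor. Real perception stacks typically go further, for example by tracking objects over time with a Kalman filter.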

The adoption of autonomous driving sensors is being accelerated by multiple industry trends. Safety regulations and Vision Zero initiatives are pushing automakers to equip vehicles with higher levels of driver-assist capability, which in turn require more advanced sensing hardware. Meanwhile, the declining cost of sensors, driven by economies of scale, improved manufacturing and solid-state designs, is making it more feasible to deploy them beyond premium models and into mass-market vehicles. In parallel, the growth of electric vehicles provides an architecture that is well aligned with the high-voltage electronics and computing platforms required for sensor processing and autonomous-capable systems.

From a technological standpoint, the shift toward solid-state LiDAR is a game changer: without moving parts, these sensors offer greater durability, more form-factor flexibility and lower cost, making them better suited to automotive integration. Improvements in sensor resolution, range, frame rate and environmental robustness allow vehicles to detect smaller and more distant objects, respond faster and handle challenging lighting or weather. Real-time on-board processing with embedded AI/ML algorithms enables quicker interpretation of sensor input and faster decision cycles, both essential for higher levels of automation. Additionally, the packaging and placement of sensors within vehicles is evolving: sensors are now being hidden behind bumpers or integrated into headlamps, roof modules or the bonnet to preserve vehicle aesthetics, aerodynamics and pedestrian safety.

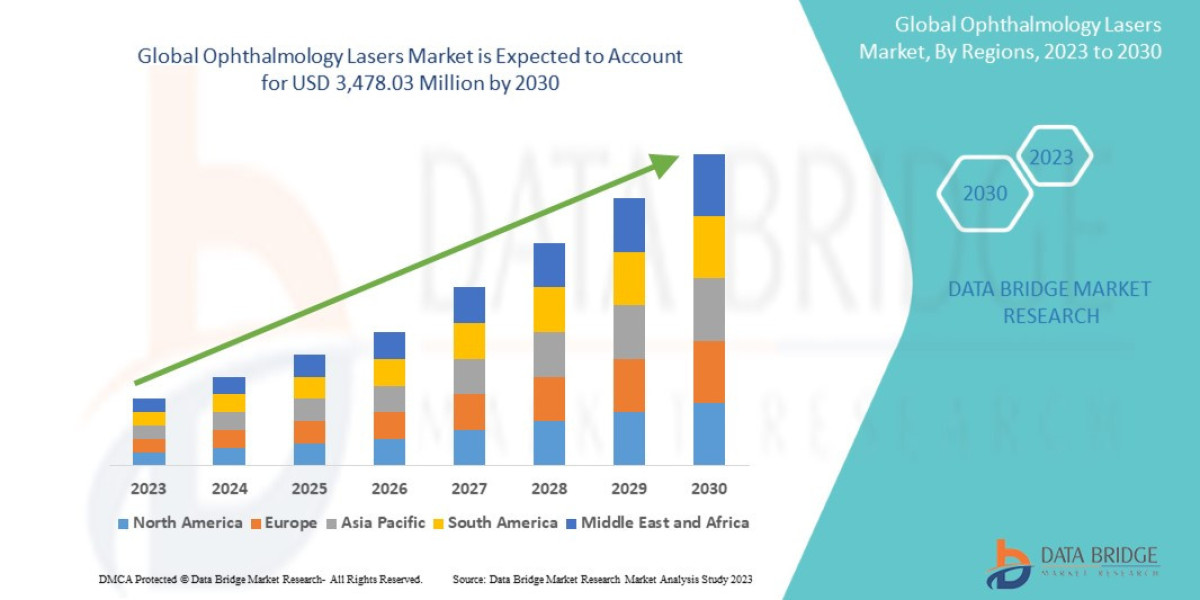

Regional dynamics for autonomous driving sensors present an interesting picture. North America continues to lead in R&D investment, early deployment of ADAS (Advanced Driver Assistance Systems) and regulatory momentum toward higher levels of automation. Europe follows closely, with strong regulatory frameworks, stringent safety standards and rising consumer demand for autonomous features. The Asia-Pacific region is emerging rapidly owing to high vehicle production volumes, strong automotive manufacturing ecosystems, and government support for smart mobility and autonomous transport initiatives. Meanwhile, other regions such as Latin America and the Middle East and Africa are progressively preparing for sensor adoption, though infrastructure, cost and regulation remain inhibitors.

As vehicles move through the SAE levels of automation, from Level 1 and Level 2 driver assistance toward Level 4 or Level 5 full autonomy, the dependence on reliable sensing escalates. The sensor suite must deliver near-flawless performance and fault tolerance, and must cover worst-case scenarios, to achieve consumer trust and regulatory approval. Furthermore, manufacturers are exploring over-the-air updates, remote diagnostics and sensor health monitoring to ensure that sensor systems remain accurate and functional throughout the vehicle lifetime. For the consumer, the benefits are tangible: improved safety, fewer accidents, enhanced convenience through features like automated lane-keeping or self-parking, and eventually the promise of vehicles that drive themselves.

Frequently Asked Questions (FAQs)

1. What types of sensors are used in autonomous driving systems and how do they complement each other?

Autonomous driving systems typically use LiDAR for 3D mapping, cameras for visual recognition, radar for long-range detection and ultrasonic sensors for near-field proximity. These sensors complement each other by providing different types of data—LiDAR gives depth, cameras give colour and texture, radar adds resilience in poor weather and ultrasonic helps with close-quarters manoeuvres. Combined they provide a richer, more reliable picture of the vehicle’s surroundings.

2. Why is sensor fusion important in autonomous driving and what are its benefits?

Sensor fusion merges data from different sensors to produce a more accurate, robust and resilient perception of the driving environment. Benefits include improved object detection and classification, redundancy in case one sensor fails or is obstructed, smoother decision making, and stronger performance under diverse conditions such as low light, rain or snow. Fusion helps the driving system act more reliably and safely.

3. What are the main challenges limiting widespread adoption of autonomous driving sensors?

Key challenges include cost (high-performance sensors such as LiDAR remain expensive, especially for mass-market vehicles), environmental robustness (sensors must work in rain, fog, snow and glare), calibration and maintenance, reliability and safety certification under automotive standards, data processing demands and system integration. Infrastructure and regulatory frameworks must also evolve to support higher levels of autonomy.

In summary, autonomous driving sensors represent one of the most critical technological foundations of future mobility. They turn vehicles into aware machines capable of navigating, interpreting and reacting to the world around them with minimal or no human intervention. As costs decline, sensor performance improves and regulation moves toward higher automation, the sensor ecosystem will become ever more mainstream. Automakers, suppliers and tech firms that innovate in sensing, integration and data processing stand to lead the next chapter of smart, autonomous vehicles.
